
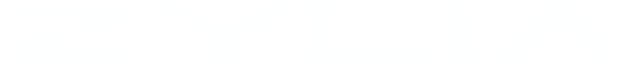

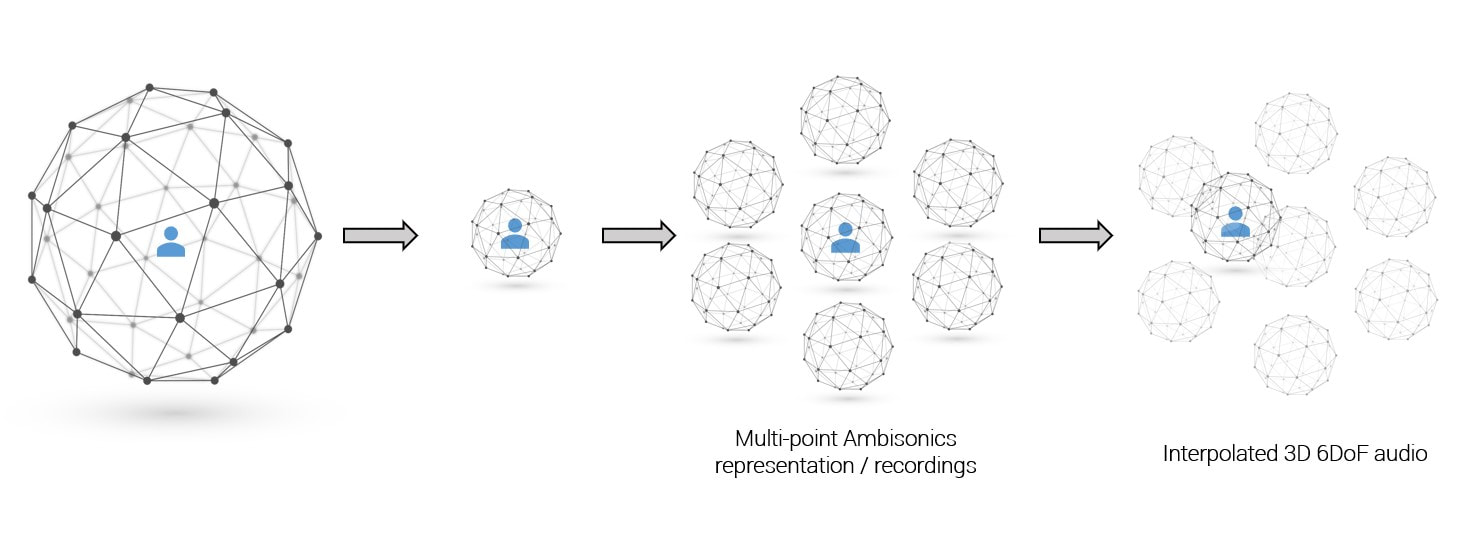

Volumetric audio recording is a technique that captures sound in a way that mimics how our ears perceive sound in the real world. It captures not only the level of sound (loudness), but also the location and movement of sound sources in a room or environment. This allows for a more immersive listening experience, as the listener can perceive the sounds as if they were actually in the room where the recording was made. There are a few different methods used to achieve volumetric audio recording. One of them is to use a grid of multiple Ambisonics microphone arrays to capture sound from different angles and positions. Additionally, the 6 degrees of freedom (6DoF) higher-order Ambisonics (HOA) rendering approach to volumetric audio recording allows the listener to move freely around the recorded space, experiencing the sound as if they were actually present in the environment.

The six degrees of freedom refer to the three linear (x, y, z) and three angular (pitch, yaw, roll) movements that can be made in a 3D space. In the context of volumetric audio recording, these movements correspond to the listener's position and orientation in the space and to the position and movement of sound sources. The resulting audio can be played back through surround sound systems or virtual reality technology to create a sense of immersion and realism. Volumetric audio recording and the 6DoF rendering approach are used in a variety of applications, such as virtual reality, gaming, film, television, and interactive audio installations, where the listener's movement and orientation play an important role in the experience. The listener can move around the space and hear the sound change accordingly, just as they would in the real world.
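To make the six degrees of freedom concrete, here is a minimal Python sketch of a listener pose. The class name `ListenerPose`, the axis convention (x forward, y left, z up), and the helper methods are assumptions made for illustration; they are not part of any ZYLIA software.

```python
import math
from dataclasses import dataclass


@dataclass
class ListenerPose:
    """Hypothetical 6DoF listener pose: three translations plus three rotations."""
    x: float = 0.0      # metres, forward/backward (assumed axis convention)
    y: float = 0.0      # metres, left/right
    z: float = 0.0      # metres, up/down
    yaw: float = 0.0    # radians, turning left/right
    pitch: float = 0.0  # radians, tilting forward/backward
    roll: float = 0.0   # radians, rolling sideways

    def distance_to(self, source_xyz):
        """Straight-line distance from the listener to a source position."""
        return math.dist((self.x, self.y, self.z), source_xyz)

    def azimuth_to(self, source_xyz):
        """Horizontal angle of a source relative to the facing direction (yaw only)."""
        sx, sy, _ = source_xyz
        world_angle = math.atan2(sy - self.y, sx - self.x)
        relative = world_angle - self.yaw
        # Wrap into [-pi, pi] so small left/right offsets stay small angles.
        return math.atan2(math.sin(relative), math.cos(relative))


pose = ListenerPose(x=1.0, y=0.0, z=0.0)
source = (4.0, 4.0, 0.0)
print(pose.distance_to(source))  # changes when the listener walks
pose.yaw = math.pi / 2
print(pose.azimuth_to(source))   # changes when the listener turns
```

Translation changes the distance to each source, while rotation changes only the direction from which it is heard. A 6DoF renderer has to account for both.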


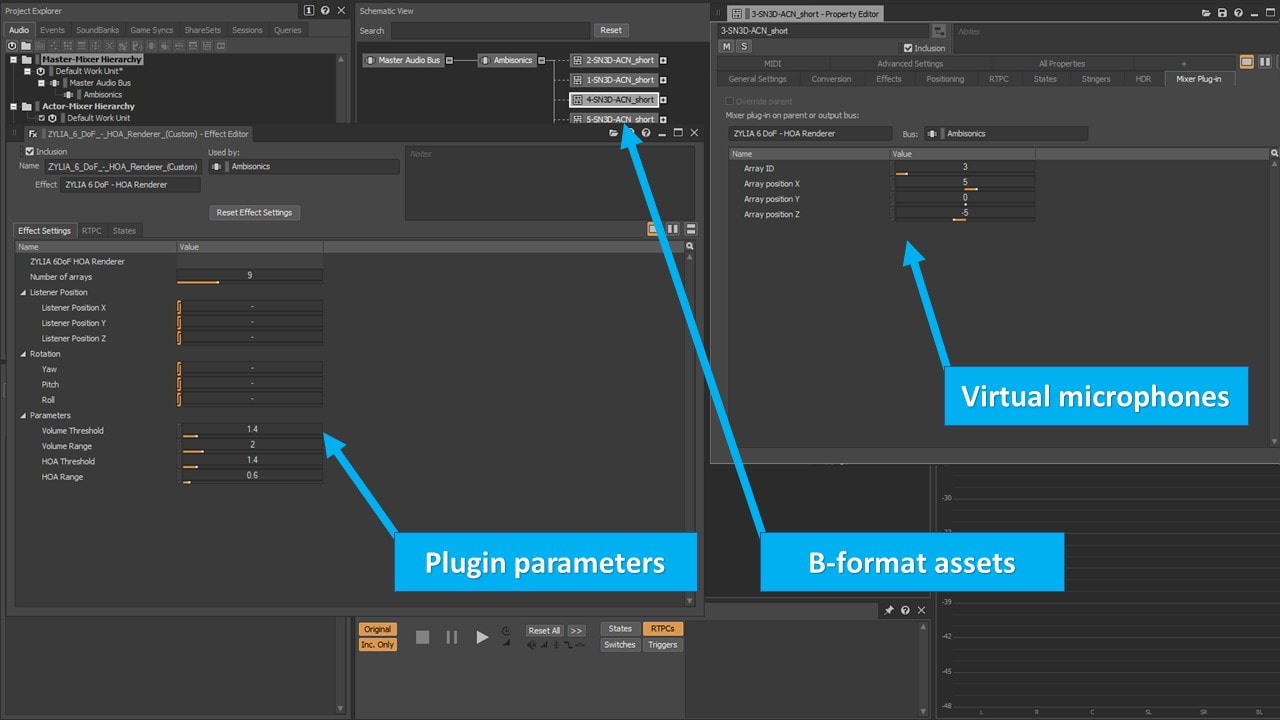

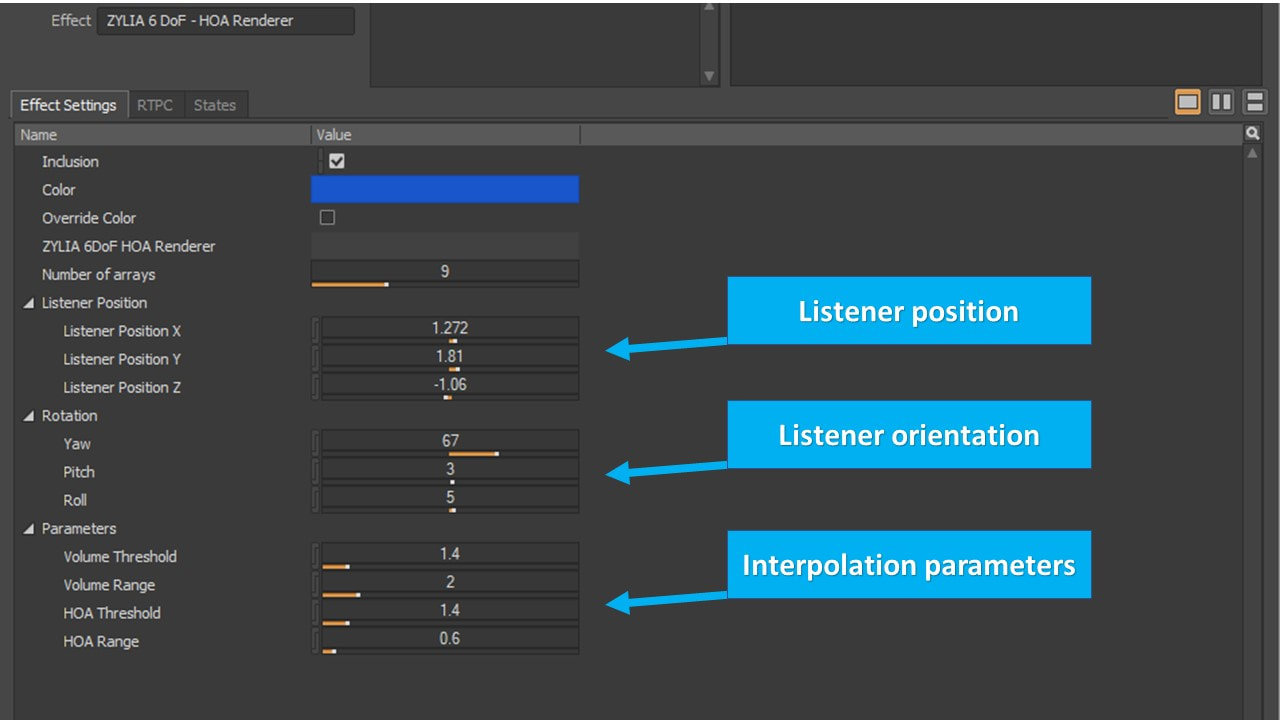

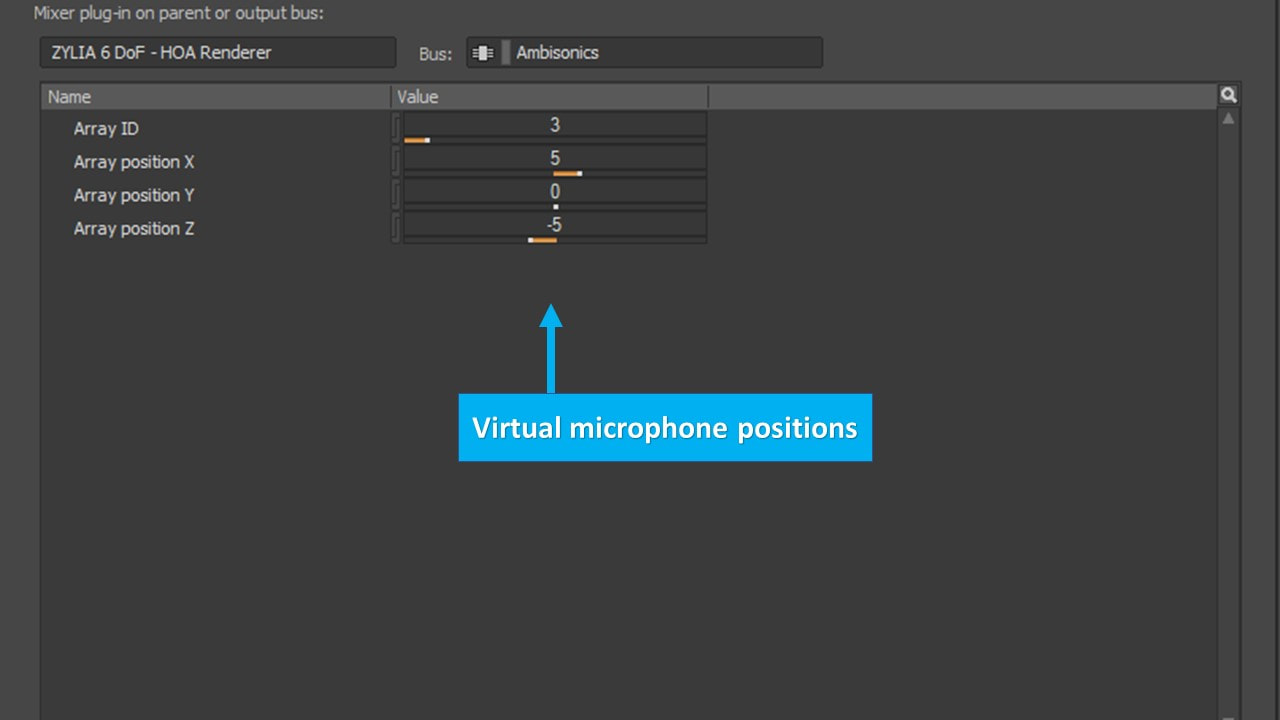

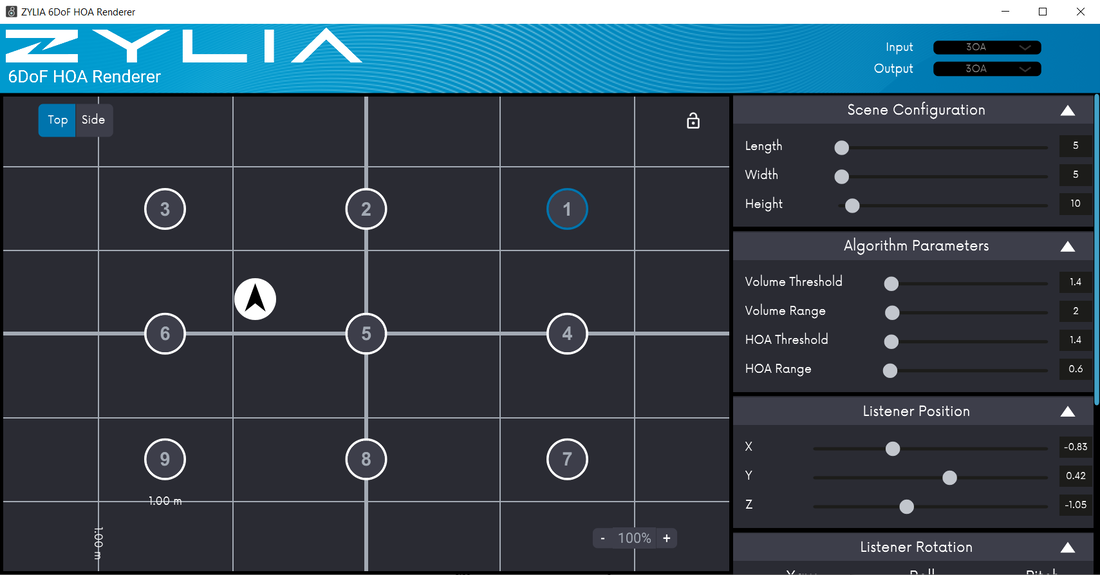

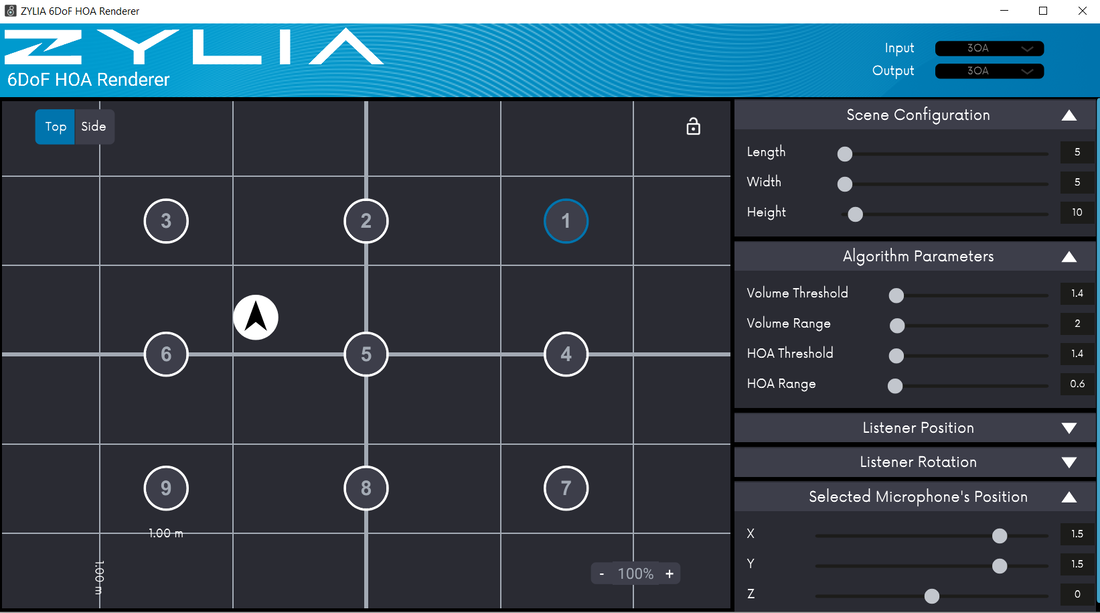

We are happy to announce the release of the ZYLIA 6DoF HOA Renderer for Wwise v1.1. This software is a key element of the ZYLIA 6DoF Navigable Audio system and is agnostic with respect to media sources. It allows you to reproduce immersive sound fields at any position by interpolating between adjacent higher-order Ambisonics signals, which can be recorded or synthetic immersive media assets. ZYLIA 6DoF Ambisonic interpolation offers immersive audio experiences with minimal demands on CPU processing. The Wwise plugin is ready to use in your VR and game projects.

Main features of the plugin

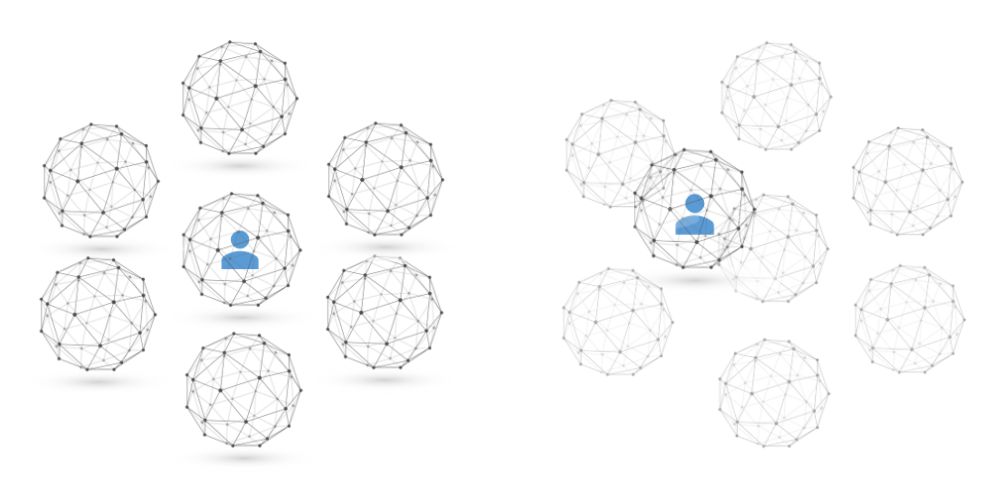

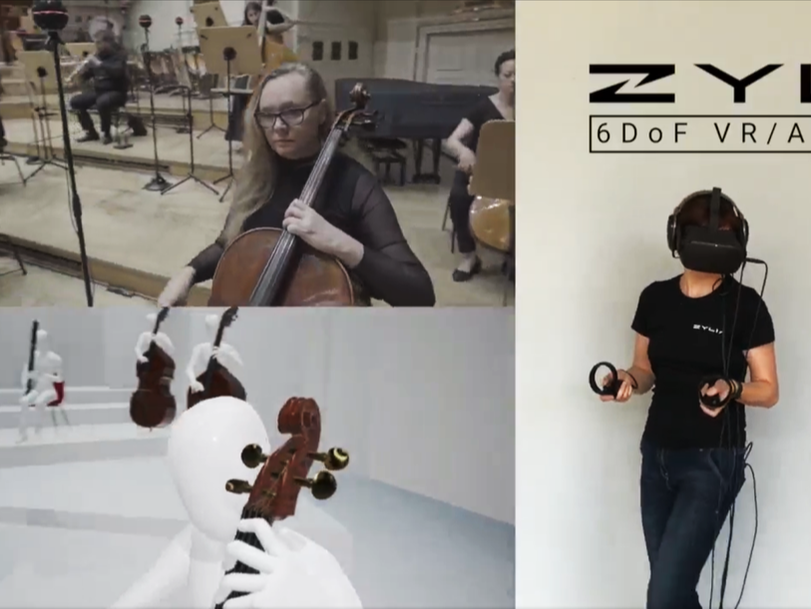

QUATRE: an immersive project connecting natural soundscapes and music recorded in multipoint higher-order Ambisonics

Last year, our creative sound engineer Florian Grond had the privilege of accompanying Christophe Papadimitriou during the production of QUATRE, an immersive work of audio art connecting soundscapes with music and improvisation. The music was recorded with ZYLIA’s 6DoF VR/AR set in the studios of the Orford Music centre in Quebec, Canada, and the nature soundscapes were recorded outdoors with a single ZYLIA ZM-1 microphone. The whole composition was mixed and mastered as a binaural album. Christophe was kind enough to grant us an interview and to speak about what moves him as an artist and what inspired him to embark on this immersive audio journey.

Photo: The 3 musicians of the project QUATRE recording in multipoint higher-order Ambisonics with ZYLIA’s 6DoF system: from left to right, Luzio Altobelli, Christophe Papadimitriou, and Omar Abou Afach.

Zylia: Hello Christophe, tell us about yourself and your artistic journey.

Christophe Papadimitriou: I arrived in Quebec from France in 1978. I live in Montreal and I have performed as a musician ever since I graduated from Concordia University in 1992. There, I studied double bass with the great jazz musician Don Habib and classical music with Éric Lagacé. Since then, I have shared the stage with many artists from Montreal, especially from the jazz and world music scene as well as with chanson artists. For the past 10 years, I have been lucky enough to be able to play and record my own compositions for theatre projects as well as for jazz and world music productions, which is what I like most! I love composing and sharing with the public the fruit of my creativity and my imagination.

Photo: Christophe Papadimitriou recording a typical Quebec summer soundscape with the ZYLIA ZM-1 microphone.

Zylia: As a double bass player and musician, which are your favorite audiences?

Christophe Papadimitriou: I am lucky to be able to work a lot and to have the opportunity to perform on stage, about a hundred performances a year. This direct contact with the public is so important for me, since it means that I can get an immediate response from the audience. This is true especially with young audiences, who are very expressive and do not censor themselves. I like it when music provokes strong sensations and I also enjoy taking risks, especially during moments of improvisation, where I feel particularly alive as a musician and experience a unique and intense moment with the public.

Zylia: Now here comes a big question: what is the meaning of music and art for you?

Christophe Papadimitriou: For me, music is the most faithful way to express emotions and inner feelings. Transcending words and images, music allows musicians and audiences to connect and to share simultaneously both collective and individual experiences. When the magic works and the musicians and the audience are transported by this positive energy, then we can experience a moment of grace. These moments are rare and, for that reason, very precious.

Photo: Recording the soundscape of a winter night with the ZYLIA ZM-1 mic at the Orford Music centre.

Zylia: You created QUATRE. What motivated you to embark on this project?

Christophe Papadimitriou: The Covid pandemic had a significant impact on our society on many levels and has forced us to reconsider, if this was not already the case, the connection we have with each other and with the planet. Questioning human activity and our impact on nature became an absolute priority for me. This realization has an impact on the arts in general, and it became clear to me that this would be the material for my next project. This is how the idea came about to create a work based on the cycle of the seasons, with the desire to reconnect with the nature that surrounds us. With this work, I want to create a dialogue between music and the sounds of nature, a moment of personal and quasi-meditative respite where the listener is invited to encounter nature in a different way and to recreate a link with what is precious.

Zylia: Who are the other musicians in QUATRE?

Christophe Papadimitriou: Key contributors to the project are Luzio Altobelli (accordion and marimba), Omar Abou Afach (viola, oud and nai) and Florian Grond (sound design). In addition to the great mastery of their instruments, Luzio and Omar bring their sensitivity and humanity to each piece of the work. It was very interesting to observe, during the production of Quatre (which took place over more than a year), how the group dynamic evolved over the seasons, including our cultural differences and also the points that bring us together. All this is reflected in the recorded music. We can hear moments of great unity and also solos where the instrumentalists can let themselves go to follow their own vision and express their feeling of the moment. Quatre is a work with written pieces, but it also leaves plenty of room for improvisation. Thus, the musicians were able to express themselves in their own musical language, which enriched the work with various traditional timbres (Middle East, Mediterranean and Quebec).

Photo: In order to share the project with various communities, QUATRE organises binaural listening sessions indoors and outdoors. Up to 12 people can connect with headphones for an individual and yet collective experience of the work.

Zylia: QUATRE was realized as a multipoint higher-order Ambisonics recording using ZYLIA’s 6DoF set. What is the appeal of immersive audio for you?

Christophe Papadimitriou: During an improvised COVID concert outdoors on my terrace, Florian recorded us for the first time, starting with a single ZM-1, which was when I discovered immersive audio recording technology. A bird was singing along while we played, and I was immediately taken by the possibility of connecting my music with the sounds of nature and treating it like a 4th instrument. It is through this technology that we can really feel surrounded by the sound universe that we have created. Quatre is conceived as a journey, a walk, through Quebec's nature through the seasons. To support this feeling, we have integrated footstep sounds (in the snow, in the sand, in the water) in each season to help the listener imagine herself walking in the forest or, more generally, in nature. The feedback we got from listeners shows that the immersive effect was quite a success.

Zylia: Also, you had it mastered as a binaural piece for headphones. Tell us why.

Christophe Papadimitriou: I am convinced that listening through headphones offers the best conditions for total listener presence. The work has many subtleties and the dynamic range of the sound is great, going from very soft to loud, so headphones are necessary to appreciate every detail. Today, a lot of people listen to music through headphones, but often while multitasking. To fully enjoy the music of Quatre, we suggest a moment of respite where the audience, comfortably seated, is completely dedicated to the act of listening. We are currently in the process of setting up several collective listening sessions with headphones in the Montreal area. These sessions will accommodate about fifteen listeners at a time and are created with the aim of discovering this work and providing an experience connecting art, nature and humans.

Support our approach and the artists by downloading Quatre here: https://christophepapadimitriou.bandcamp.com/album/quatre-sur-le-chemin

Want to learn more about multi-point HOA music and soundscape recordings? Contact our Sales team:

The idea

In July 2021, Jordan Rudess (Dream Theater) and his friends Steve Horelick and Jerry Marotta gathered at the Dreamland Recording Studios, NY, for a spectacular and singular concert event, spanning classical to impressionistic piano genres to progressive, experimental rock with spacious synth soundscapes.
The team at Zylia captured the performance with multiple 360° cameras and 3rd order Ambisonics microphones, allowing the creation of a unique, immersive 3D journey. Zylia’s 3D Audio recording solution gives artists a unique opportunity to connect intimately with the audience. Thanks to the Oculus VR application, viewers at home can experience each element of the performance as vividly as a live audience member would. Using Oculus VR goggles and headphones, viewers have the opportunity to:

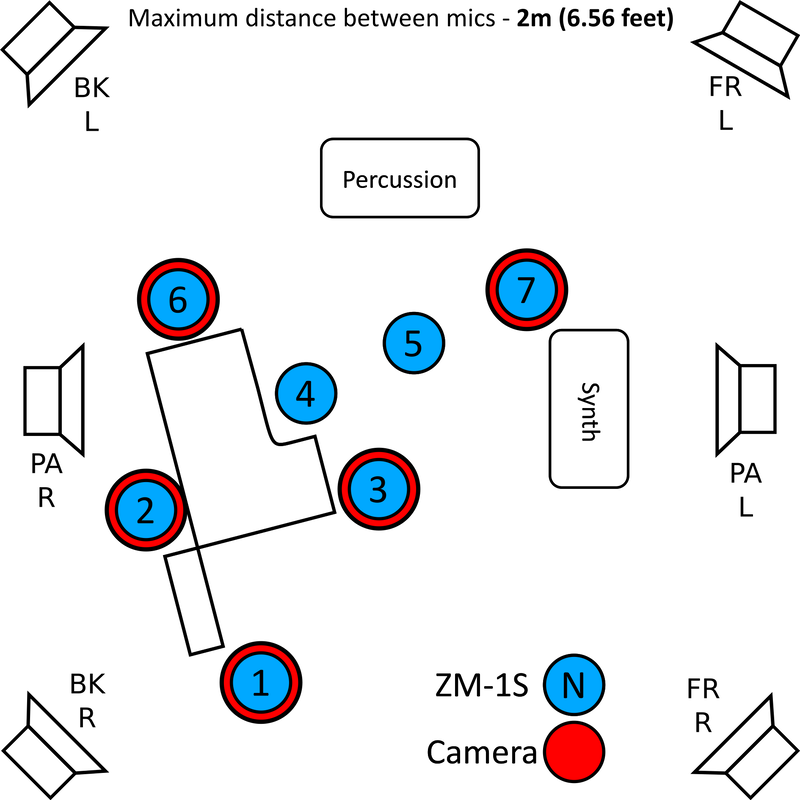

Technical information

To create a multi-point 360° audio-video experience, we set up recording spots on the stage among the musicians, each equipped with a ZYLIA ZM-1S and a 360° camera. This lets a listener choose the point from which they watch the concert and experience it in immersive 360°. With every move, the sound delivered to the listener’s ears changes to match the position of their head. To combine 360° video with 360° audio, we used a video editor (Adobe Premiere), a Digital Audio Workstation supporting 19-channel tracks (Reaper), and the Unity3D and Wwise engines.

The equipment used for the recording of the “Jordan Rudess & Friends” concert

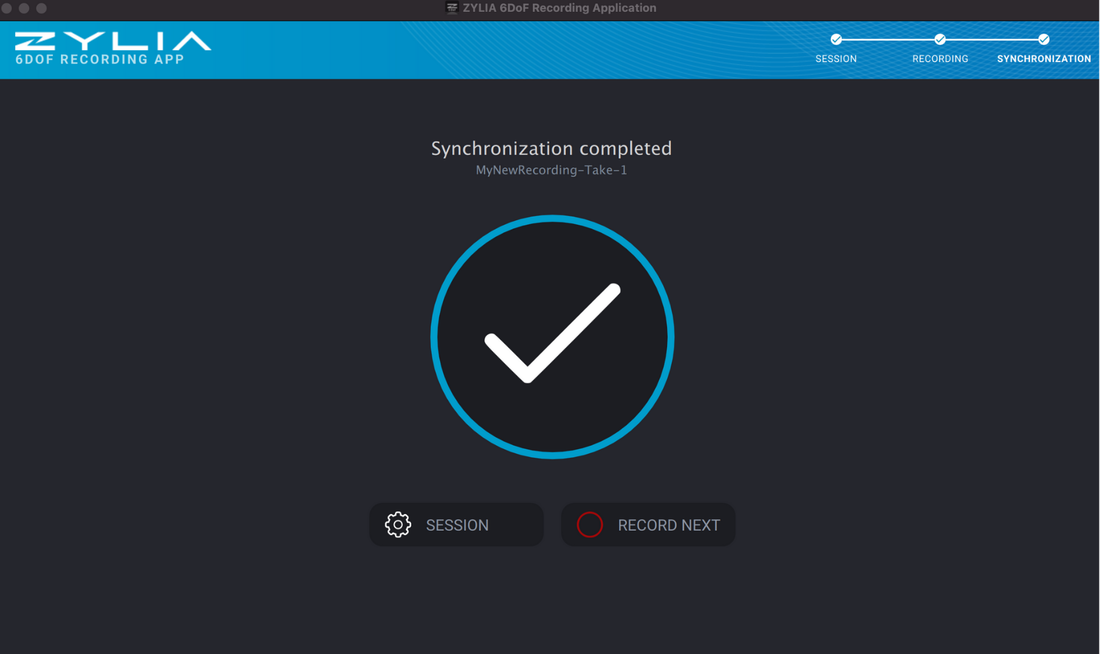

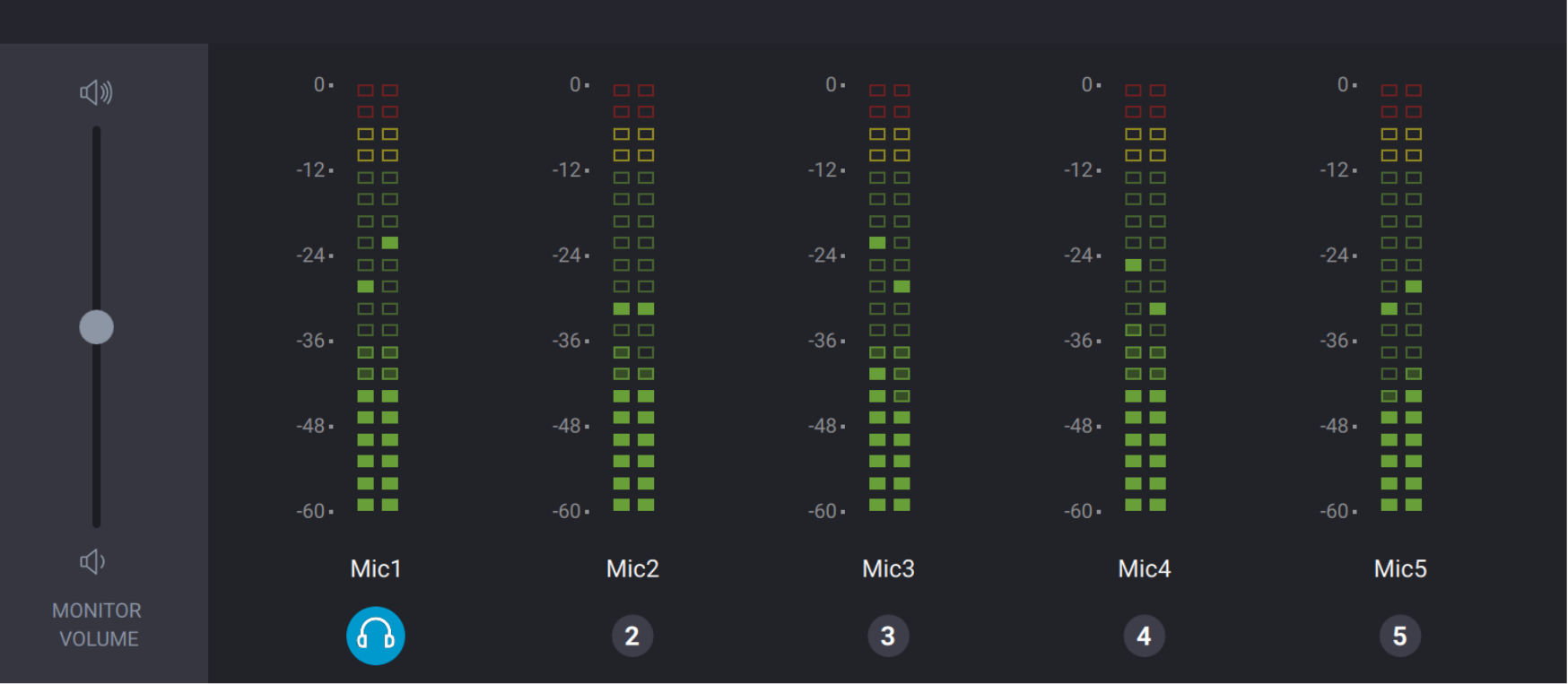

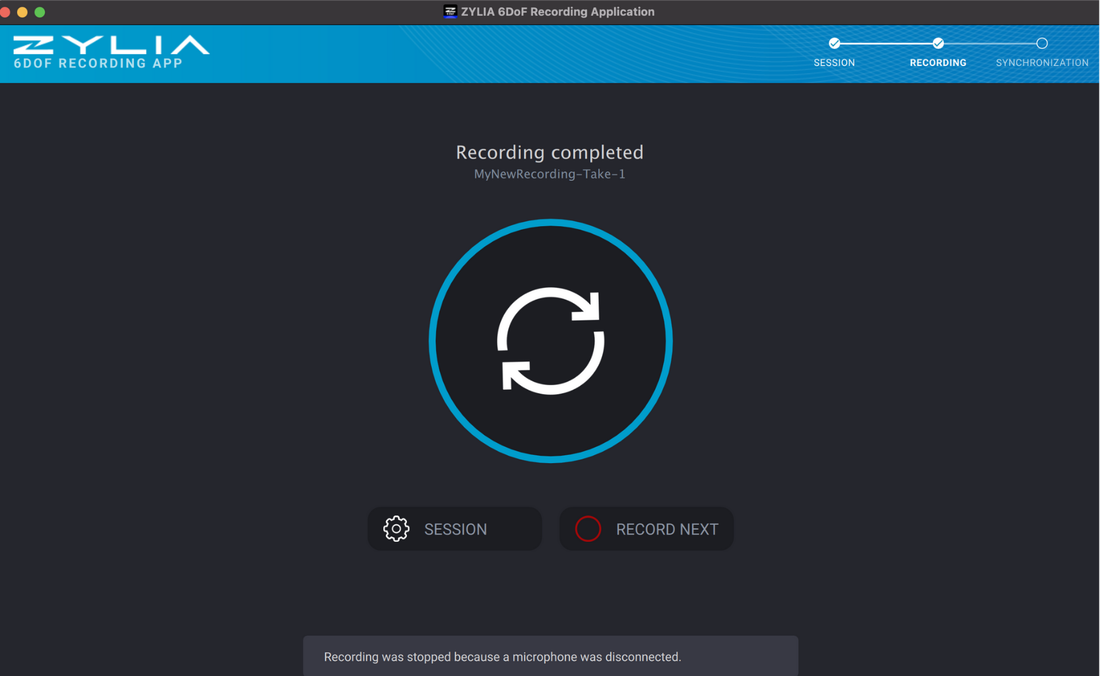

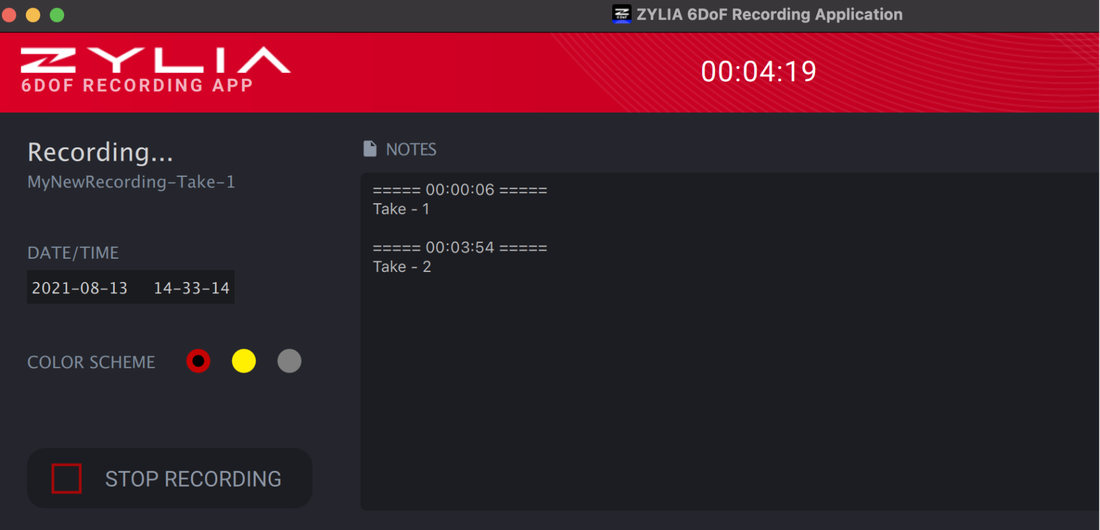

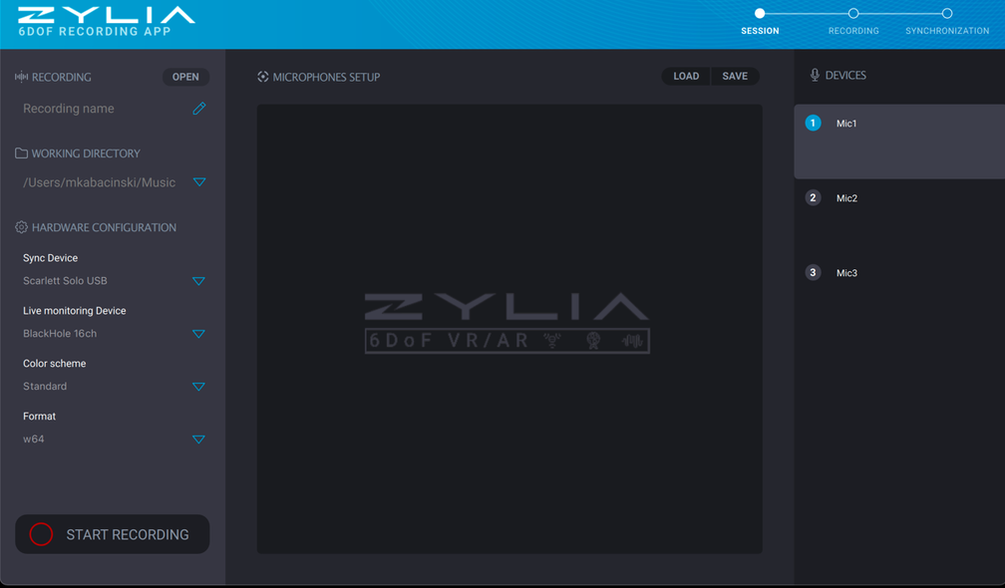

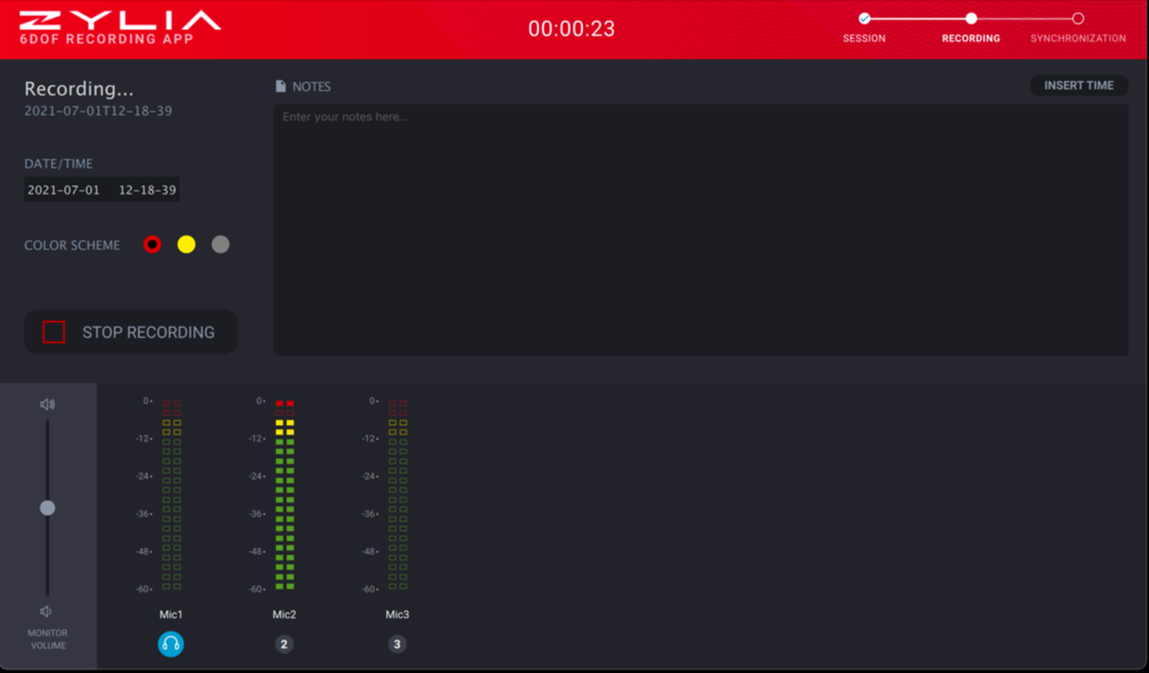

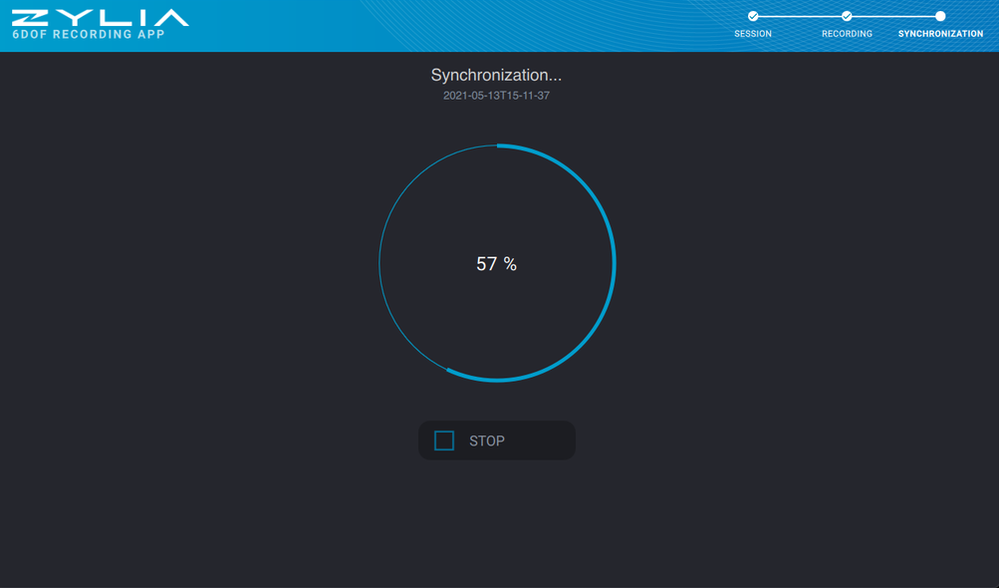

Microphone array placement

Recording process

Recording was done using the ZYLIA 6DoF Recording Application. Qoocam 8K cameras were used for video recording.

Data processing/production pipeline

The RAW signals from the microphones were synchronized using the ZYLIA 6DoF Recording Application and converted to 3rd order Ambisonics format. The output Ambisonics signals were mixed in DearVR software. The 360° videos from the Qoocam 8K cameras were stitched and converted to an equirectangular video format. To distribute the concert, a VR application for Oculus Quest VR goggles was created with the Unity3D and Wwise engines.

Multi-point 360° audio and video – the outcome

The outcome of this project is an Oculus application with the concert “Jordan Rudess & Friends in 3D Audio”: an immersive, deep, and emotionally engaging music experience.

Want to learn more about multi-point 360 audio and video productions? Contact our Sales team:

RECORDING MULTIPOINT 3D AUDIO HAS NEVER BEEN EASIER! ZYLIA 6DoF RECORDING APPLICATION V.1.0.0

8/20/2021

Tiger Woods once said that no matter how good you get, you can always get better, and that's the exciting part. Here at Zylia, we couldn’t agree more, so we are thrilled to present a new and, more importantly, improved version of our ZYLIA 6DoF Recording Application. Intuitiveness, efficiency, and a great look: that is how we would describe, in a few words, the recently released ZYLIA 6DoF Recording Application. Let's take a closer look at it together!

For those a little less familiar with our products: the ZYLIA 6DoF Recording Application is part of the ZYLIA 6 Degrees of Freedom Navigable Audio solution, which is the most advanced technology on the market for recording and post-processing multipoint 3D audio.
The solution is based on multiple Higher Order Ambisonics microphones, which capture large sound scenes in high spatial resolution, and a set of software for recording, synchronizing signals, converting audio to B-Format, and rendering HOA files. The new ZYLIA 6DoF Recording Application replaces the command-line toolkit for recording and synchronization. Thanks to a clear and comfortable graphical user interface, working with the app is fast and easy. We’ve also added a number of new features that significantly improve the user experience.

GET OFF TO A GOOD START

The ZYLIA 6DoF Recording Application was designed as a tool for capturing 3D audio content from multiple ZYLIA ZM-1S microphones (up to 3000 channels on a single computer). To make the entire process smoother, we’ve introduced a set of very useful options:

BETTER THAN EVER

Although the command-line toolkit did its job very well, our drive for excellence and constant attention to our customers' opinions led us to develop a new, better version of the Recording Application. During the optimization, we not only kept the old, proven features but also added new ones and took care of the visual aspect of the interface. Here are all the improvements we introduced:

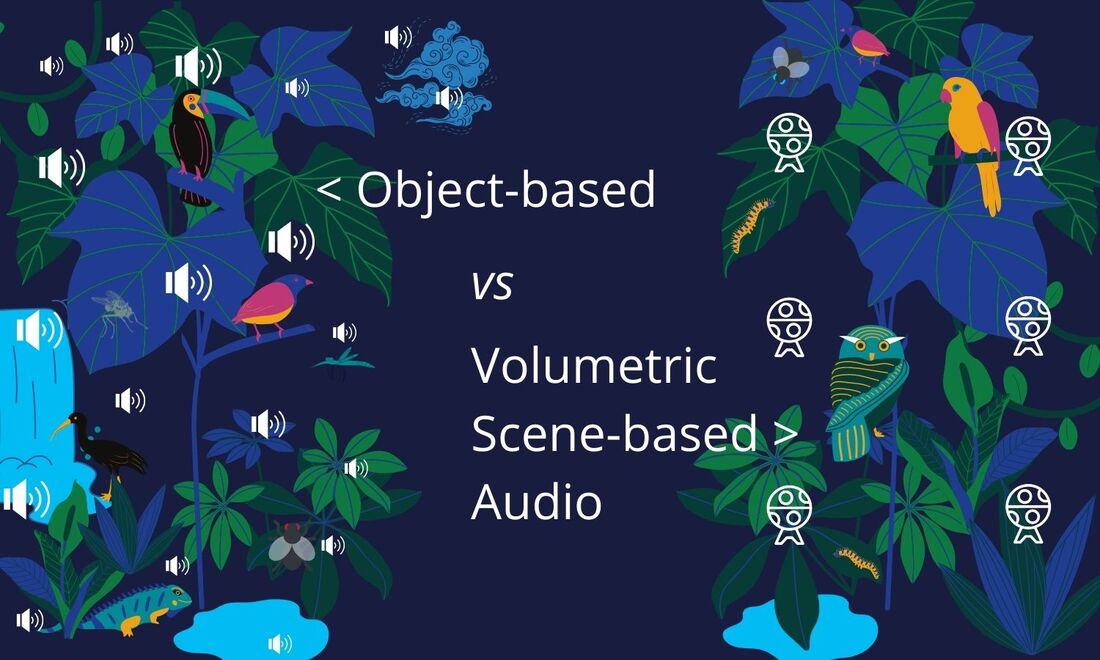

THE ICING ON THE CAKE

While working on the new ZYLIA 6DoF Recording Application, we did not forget to make the interface aesthetically pleasing and familiar to our users, offering great usability and a joyful experience. See for yourself:

Efficient Volumetric Scene-based audio with ZYLIA 6 Degrees of Freedom solution

What is the difference between Object-based audio (OBA) and Volumetric Scene-based audio (VSBA)?

OBA

The most popular method of producing a soundtrack for games is known as Object-based audio. In this technique, the entire audio consists of individual sound assets with metadata describing their relationships and associations. Rendering these sound assets on the user's device means assembling these objects (sound + metadata) to create an overall user experience. The rendering of objects is flexible and responsive to user, environmental, and platform-specific factors [ref.]. In practice, if an audio designer wants to create an ambience for an adventure in a jungle, he or she needs to use several individual sound objects, for example, the wind rustling through the trees, sounds of wild animals, the sound of a waterfall, the buzzing of mosquitoes, etc. The complexity of Object-based rendering increases with the number of sound objects. The more individual objects there are (the more complex the audio scene is), the higher the CPU usage (and hence power consumption), which can be problematic on mobile devices or when bandwidth for data transmission is limited.

VSBA

A complementary approach for games is Volumetric Scene-based audio, especially if the goal is to achieve natural behavior of the sound (reflections, diffraction). VSBA is a set of 3D sound technologies based on Higher-Order Ambisonics (HOA), a format for the modeling of 3D audio fields defined on the surface of a sphere.
It allows for accurate capturing, efficient delivery, and compelling reproduction of 3D sound fields on any device (headphones, loudspeakers, etc.). VSBA and HOA are deeply interrelated; therefore, these two terms are often used interchangeably. Higher-Order Ambisonics is an ideal format for productions that involve large numbers of audio sources, typically held in many stems. While transmitting all these sources plus meta-information as OBA may be prohibitive, the Volumetric Scene-based approach limits the number of PCM (Pulse-Code Modulation) channels transmitted to the end user by sending compact HOA signals [ref.].

ZYLIA's interpolation algorithm for 6DoF 3D audio

Creating a sound ambience for an adventure in a jungle through Volumetric Scene-based audio can be as simple as taking multiple HOA microphones to the natural environment that produces the desired soundscape and recording an entire 360° audio sphere around the devices. The main advantage of this approach is that the complexity of the VSBA rendering does not increase with the number of objects. This is because the source signals are converted to a fixed number of HOA signals that depends only on the HOA order, not on the number of objects present in the scene. This is in contrast with OBA, where rendering complexity increases as the number of objects increases. Note that Object-based audio scenes can profit from this advantage by converting them to HOA signals, i.e., Volumetric Scene-based audio assets.

To summarize, the advantages of the Volumetric Scene-based audio approach affecting CPU and power consumption are:

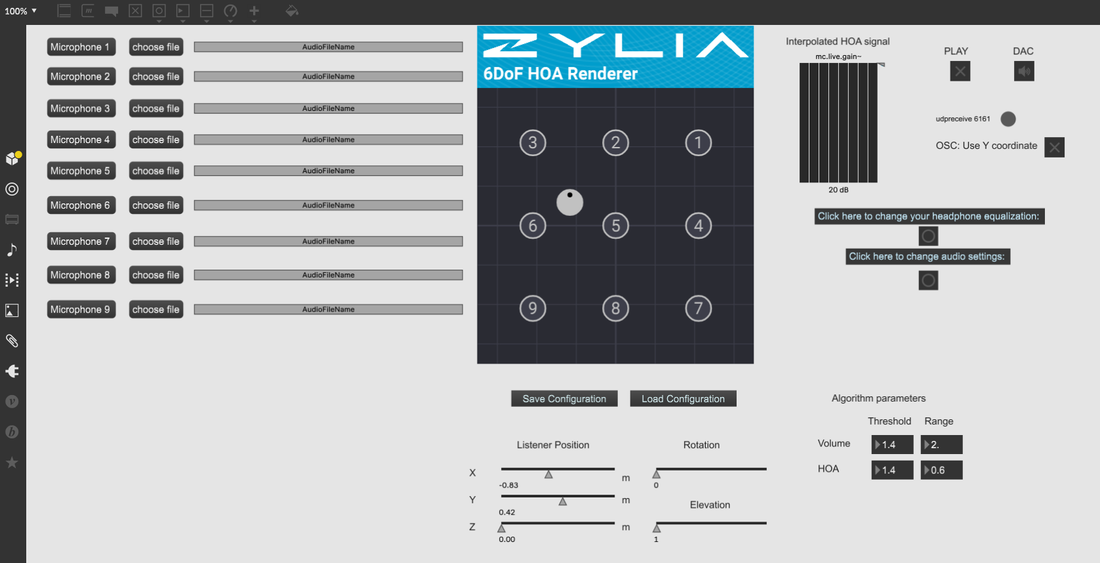

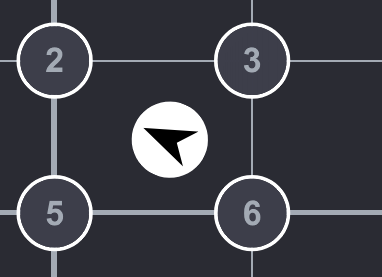
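The claim that the number of HOA signals depends only on the Ambisonics order can be checked with a short Python sketch. A full-sphere Ambisonics signal of order N has (N + 1)² channels. The function names below, and the assumption that each object is transmitted as one mono stream, are illustrative only.

```python
def hoa_channel_count(order: int) -> int:
    """Number of channels in a full-sphere Ambisonics signal of the given order."""
    if order < 0:
        raise ValueError("Ambisonics order must be non-negative")
    return (order + 1) ** 2


def oba_channel_count(num_objects: int, channels_per_object: int = 1) -> int:
    """Channels to transmit when every object is sent as its own stream (assumed mono)."""
    return num_objects * channels_per_object


ORDER = 3  # the order of the microphones described in this article
for num_objects in (4, 16, 64, 256):
    # The object-based count grows with the scene; the HOA count stays fixed.
    print(num_objects, oba_channel_count(num_objects), hoa_channel_count(ORDER))
```

At 3rd order the scene-based signal has 16 channels, whether the scene contains four sources or several hundred.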

Zylia 6 Degrees of Freedom Navigable Audio

One of the most innovative and efficient tools for producing Volumetric Scene-based audio is the ZYLIA 6 Degrees of Freedom Navigable Audio solution. It is based on several Higher Order Ambisonics microphones, which capture large sound scenes in high resolution, and a set of software for recording, synchronizing signals, converting audio to B-Format, and rendering HOA files. The Renderer can also be used independently of the 6DoF hardware to create navigable 3D assets for audio game design.

The ZYLIA 6DoF HOA Renderer is a Max/MSP plugin available for macOS and Windows. It processes and renders ZYLIA Navigable 3D Audio content. With this plugin, users can play back the synchronized Ambisonics files, change the listener’s position, and interpolate multiple Ambisonics spheres. The plugin is also available for Wwise, allowing developers to use ZYLIA Navigable Audio technology in various game engines.

Watch the comparison between Object-based audio and Volumetric Scene-based audio produced with the Zylia 6 Degrees of Freedom Navigable Audio solution. Notice how the 6DoF approach reduces CPU usage during sound rendering.

Volumetric Scene-based audio and Higher Order Ambisonics can be used for many different purposes, not only for creating soundtracks for games. This format is very efficient when producing audio for:

#zylia #gameaudio #6dof #objectbased #scenebased #audio #volumetric #gamedevelopment #GameDevelopersConference #GDC2021 #GDC

Want to learn more about multi-point 360 audio and video productions? Contact our Sales team:

We are happy to announce the new release of the ZYLIA 6DoF Recording Application in version 1.0.0 for Linux and macOS. This application is a part of the ZYLIA 6DoF Navigable Audio solution (ZYLIA 6DoF VR/AR set). It replaces the command-line toolkit for the recording and synchronization process. For your comfort, this application has a graphical user interface, so there is no need to use the command line anymore. This application offers all features of the ZYLIA 6DoF Recording Toolkit such as:

Additionally, a few new features have been added.

Configure your session. Make the recording. Synchronize raw audio files. The command-line application will remain available but will not be developed further.

We are happy to announce the release of the ZYLIA 6DoF HOA Renderer for Max/MSP v2.0 (macOS, Windows). This software is a key element of the ZYLIA 6DoF Navigable Audio system. It allows you to reproduce the sound field at a given location based on Ambisonics signals recorded with ZM-1S microphones. The plugin works in the Max/MSP environment, so you can use this tool directly in your project. Please refer to the example project provided with our plugin and to the manual for the ZYLIA 6DoF HOA Renderer.

The newest ZYLIA 6DoF HOA Renderer for Max/MSP includes many improvements. The new user interface can be opened in a separate window and lets you set up the whole configuration directly in the plugin, without using the message mechanism of Max/MSP. The UI also allows you to lock the microphones’ positions, preventing unintentional changes to the scene configuration.

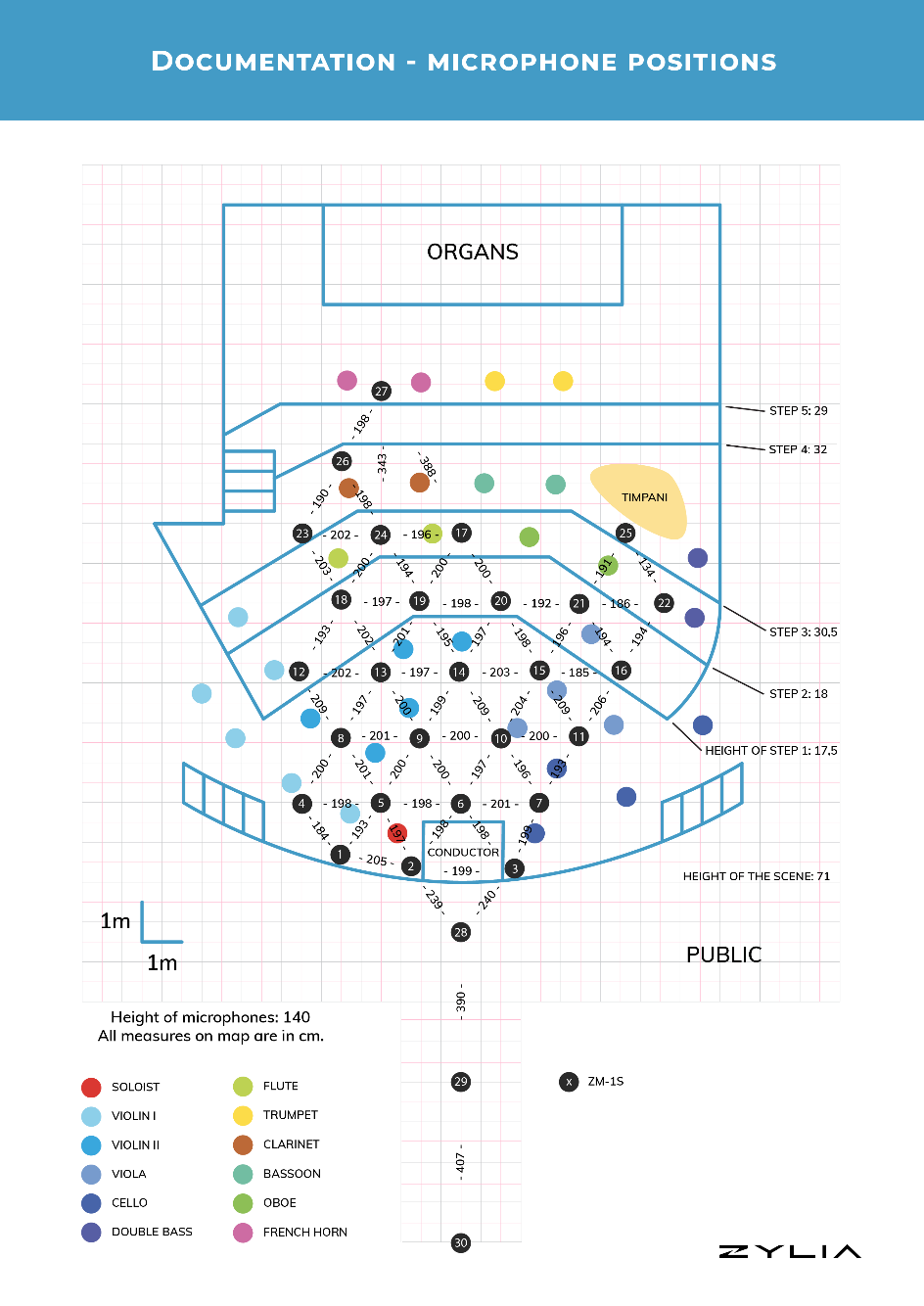

Another improvement is the increased number of supported signals: you can now pass up to 30 HOA signals to the plugin and create a 6DoF experience on much larger scenes. If you would like to test this plugin, you can use a 7-day free trial and experiment with our 6DoF audio rendering algorithm. The test recording data for the plugin can be found on our webpage.

The idea

Zylia, in collaboration with the Poznań Philharmonic Orchestra, presented the world's first navigable audio in a live-recorded performance of a large classical orchestra. With 34 musicians on stage and 30 ZYLIA 3rd order Ambisonics microphones, it was possible to create a virtual concert hall where each listener can follow their own audio path and get a real being-there sound experience.

ZYLIA 6 Degrees of Freedom Navigable Audio is a solution based on Ambisonics technology that allows recording an entire sound field around and within any performance imaginable. For an ordinary listener, it means that while listening to a live-recorded concert they can walk through the audio space freely. For instance, they can approach the stage, or even step onto the stage and stand next to a musician. At every point, the sound they hear will be a bit different, as in real life. Right now, this is the only technology like that in the world.

The 6 Degrees of Freedom in the name of Zylia’s solution refer to 6 directions of possible movement: up and down, left and right, forward and backward, rotation left and right, tilting forward and backward, and rolling sideways. In post-production, the exact positions of the microphones placed in the concert hall are mirrored in the virtual space through the ZYLIA software. Once this is done, the listener can create their own audio path, moving in the 6 directions mentioned above, and choose any listening spot they want.
Technical information

6DoF sound can be produced with an object-based approach, by placing pre-recorded mono or stereo files in a virtual space and then rendering the paths and reflections of each wave in this synthetic environment. Our approach, by contrast, uses multiple Ambisonics microphones, which allows us to capture sound in almost every place in the room simultaneously. It thus provides 6DoF sound that consists only of real-life audio recorded in a real acoustic environment.

How was it recorded?

* Two MacBook Pro laptops for recording
* A single Linux PC workstation serving as a backup for recordings
* 30 ZM-1S mics: 3rd order Ambisonics microphones with synchronization
* 600 audio channels: 20 channels from each ZM-1S mic multiplied by 30 units
* 3 hours of recordings, 700 GB of audio data

Microphone array placement

The placement of 30 ZM-1S microphones on the stage and in front of it.

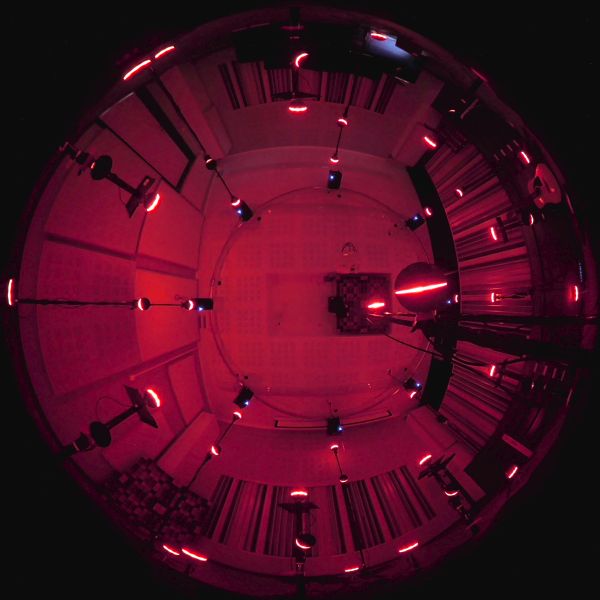
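The channel total quoted for this setup is simple arithmetic, sketched below in Python for readers planning their own multi-array sessions. The function names are hypothetical. The per-array channel counts (20 here, 19 for the 53-array installation described later in this article) are taken from the text.

```python
def raw_channel_total(num_arrays: int, channels_per_array: int) -> int:
    """Raw capture channels recorded simultaneously across all arrays."""
    return num_arrays * channels_per_array


def hoa_channel_total(num_arrays: int, order: int) -> int:
    """Channels after each array is converted to Ambisonics of the given order."""
    return num_arrays * (order + 1) ** 2


print(raw_channel_total(30, 20))   # orchestra recording: 30 arrays x 20 channels
print(raw_channel_total(53, 19))   # multi-level test installation: 53 arrays x 19 channels
print(hoa_channel_total(30, 3))    # the same 30 arrays after 3rd order conversion
```

After conversion to 3rd order Ambisonics, each array contributes 16 channels regardless of how many raw channels it recorded.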

Recording process

To be able to choose the best versions of the performances, the Orchestra played the Overture nine times and the Aria eight times, with three additional overdubs. Alongside the audio recording, we captured video to document the event. The film crew placed four static cameras in front of the stage and on the balconies. One camera operator moved along a pre-planned path on the stage. Additionally, we put two 360-degree cameras among the musicians. Our chief recording engineer made sure that everything was ready (static cameras, moving camera operator, 360 cameras and recording engineers) and then gave the Conductor a sign to begin the performance. When the LED rings on the 30 arrays turned red, everybody knew that the recording had started.

Data

A large amount of data makes it possible to explore the same moment in endless ways. Recording all 19 takes of the two music pieces resulted in 700 GB of stored audio. The entire recording and preparation process was filmed with several cameras, and around 650 GB of video was captured. In total, we gathered almost 1.5 TB of data.

Post-processing and preparing data for the ZYLIA 6DoF renderer

First, we had to prepare the 3D model of the stage. The model of the concert hall was redesigned to match the real-life dimensions. Then, we placed the microphones and musicians according to accurate measurements. When this was done, specific parameters of the interpolation algorithm in the ZYLIA 6DoF HOA Renderer had to be set. The next task was the most difficult in post-production: matching the real camera sequences with the sequences from the VR environment in Unreal Engine. After this painstaking process of matching the paths of the virtual and real cameras, a connection between Unreal and Wwise was established. In this way, we could render the sound along the defined path in Unreal, just as if someone was walking there in VR.
Last but not least, we had to synchronize and connect the real and virtual video with the desired audio.

The outcome

The outcome of this project is presented in the “The Walk Through The Music” movie, where we can enter the music spectacle from the audience position and move around the artists on the stage. You can also watch the “Making of” movie for more detailed information on what the setup looked like.

Want to learn more about volumetric audio recording? Contact our experts.

Zylia introduced a six-degrees-of-freedom (6DoF) multi-level microphone array installation for navigable live-recorded audio.

What does it mean?

We are working on technology that lets people listen to a concert or live performance from any point in the audio scene. With our technology, you can record an audio scene from different points in the space: the center of the stage, the middle of a string quartet, the audience, or backstage. Audio recorded in this way can be used together with virtual reality projections, allowing the user to move freely around the space, experience the audio scene naturally, and listen to it from different perspectives.

6DoF installation and test setup

The first step of the test recordings was to install nine 3rd-order Ambisonics microphone arrays on the same level and record musicians playing their performance. This approach allowed the listener to move around those nine points and listen to the music from different perspectives. However, placing the microphones on a single level limited the audio resolution in the vertical plane. Since we like challenges, we decided to increase the number of microphones to 53 and build an installation on five different levels. This allowed us to move freely in every direction of the recorded scene in a truly immersive experience. The second idea behind this test setup was to check the limits of Ambisonics recordings in order to achieve a fully navigable audio scene.
We placed the microphone arrays densely in the recorded scene and obtained a spatial audio image of very high resolution.

Technical aspects:

We used 53 19-channel microphone arrays, which gave us 1007 audio channels recorded simultaneously. The microphones were connected to a USB hub, and the recordings were operated from a single laptop. The audio recorded from each microphone array was converted to 3rd-order Ambisonics using our ZYLIA Ambisonics Converter plugin (this can be done in real time or offline). After the recording, we used our interpolation software. This software is a MaxMSP plugin that generates 3rd-order Ambisonics spheres at your current position, based on the signals from all the microphones. When you put on your headphones and VR headset and move around the space, the algorithm in MaxMSP takes your position and interpolates the 3D sound at that position.

We used 3rd-order Ambisonics microphone arrays. This is important because the higher the order, the more precisely sound can be localized around the listener. We are able to recreate the sound with very high spatial resolution, which influences the audio quality, an extremely important aspect for listeners. With this simple approach, you can record the natural audio scene for your VR/AR productions and use it right away, without a complicated post-production workflow. You can record live events and stream audio directly to listeners, giving them the possibility to freely choose their position in this real-time recorded space for an ultimate immersive audio experience.

Application: cinematic trailers for VR, audio for games, live performance recording, and domes with multi-loudspeaker installations.
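To make the channel counts and the idea of position-based interpolation from the technical aspects more concrete, here is a minimal Python sketch. The array and capsule counts come from the text, and the formula for the number of channels in a full-sphere Ambisonics signal, (order + 1)², is standard. Everything else is an assumption for illustration only: the function names are hypothetical, and the inverse-distance weighting is a deliberately simple stand-in, not the algorithm used in the ZYLIA interpolation software, whose details are not described here.

```python
import math

NUM_ARRAYS = 53          # from the text: 53 microphone arrays
CAPSULES_PER_ARRAY = 19  # from the text: 19 channels per array
AMBISONICS_ORDER = 3     # from the text: 3rd-order Ambisonics


def hoa_channel_count(order):
    """Channels in a full-sphere Ambisonics signal of the given order."""
    return (order + 1) ** 2


def interpolation_weights(listener_pos, array_positions, power=2.0, eps=1e-6):
    """Inverse-distance weights that sum to 1 (illustrative assumption).

    Arrays closer to the listener get larger weights; eps avoids a
    division by zero when the listener stands exactly at an array.
    """
    raw = [
        1.0 / (math.dist(listener_pos, pos) + eps) ** power
        for pos in array_positions
    ]
    total = sum(raw)
    return [w / total for w in raw]


def interpolate_frame(listener_pos, array_positions, hoa_frames, power=2.0):
    """Blend one Ambisonics sample frame per array into a single frame.

    hoa_frames[i] holds the Ambisonics channel values of array i for one
    sample instant. A plain weighted sum like this ignores time-of-arrival
    and level differences between arrays, so it is only a rough sketch.
    """
    weights = interpolation_weights(listener_pos, array_positions, power)
    num_channels = len(hoa_frames[0])
    return [
        sum(w * frame[ch] for w, frame in zip(weights, hoa_frames))
        for ch in range(num_channels)
    ]


raw_channels = NUM_ARRAYS * CAPSULES_PER_ARRAY
hoa_channels = NUM_ARRAYS * hoa_channel_count(AMBISONICS_ORDER)
print(raw_channels, hoa_channels)
```

In this sketch, a listener standing halfway between two arrays hears an equal mix of both, and a listener standing at an array position hears almost only that array. A production renderer would need to handle far more than this, so treat the weighting scheme as a placeholder for whatever the actual interpolation does.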


© Zylia Sp. z o.o., copyright 2018. ALL RIGHTS RESERVED.
