Benefits

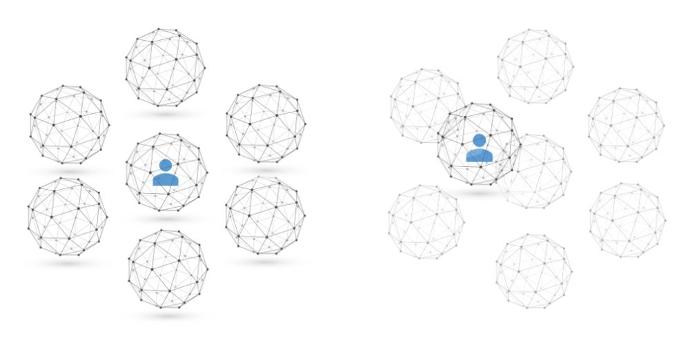

- Experience the freedom of placing, moving, and rotating the listener’s perspective!

- Hear the effect of natural movement through a sound scene

- Immerse yourself in the possibility of a multi-point 360° audio and video experience, including during a live stream

- Capture large sound scenes simultaneously with an immersive multi-microphone setup

- Synchronize the playback of multiple Higher Order Ambisonics files

- Enjoy the highly customizable integration with game engines

Frequently Asked Questions regarding the ZYLIA 6DoF Navigable Audio solution:

Our internal experiments and listening tests have shown that the optimal distance between the microphones is 1.5 to 2 meters. This placement gives the best results in terms of interpolation and sound source localization. However, placing the microphones up to 10 meters apart can still give satisfactory spatial resolution. In general, the smaller the distance, the higher the spatial resolution that can be achieved.
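
To check a planned layout against these ranges, we can compute each microphone's distance to its nearest neighbor and compare it with the 1.5 to 2 m and 10 m figures above. This is a minimal sketch: the layout coordinates and the function names are hypothetical, and the labels simply restate the guidance in this answer.

```python
import math

# Spacing guidance from the answer above, in meters.
OPTIMAL_MIN, OPTIMAL_MAX = 1.5, 2.0
ACCEPTABLE_MAX = 10.0


def nearest_neighbor_distances(positions):
    """For each microphone, return the distance to its closest neighbor."""
    return [
        min(math.dist(p, q) for j, q in enumerate(positions) if j != i)
        for i, p in enumerate(positions)
    ]


def classify(distance):
    """Map a spacing (meters) onto the ranges described in the FAQ."""
    if distance < OPTIMAL_MIN:
        return "closer than the recommended range"
    if distance <= OPTIMAL_MAX:
        return "optimal"
    if distance <= ACCEPTABLE_MAX:
        return "acceptable"
    return "beyond the tested range"


# Hypothetical layout: three arrays on a line, (x, y) in meters.
mics = [(0.0, 0.0), (1.8, 0.0), (12.0, 0.0)]
for idx, d in enumerate(nearest_neighbor_distances(mics)):
    print(f"mic {idx}: {d:.2f} m -> {classify(d)}")
```

Using the nearest neighbor rather than every pair reflects the fact that interpolation happens between adjacent capture points; a sparse corner of the grid shows up immediately.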

It all depends on the creative idea and effects you want to achieve.

To create a 6DoF audio experience, we use several Ambisonics microphones to record the sound scene from many different perspectives. For proper 6DoF playback, all these recordings need to be synchronized. To achieve that, the software trims the recordings at the beginning so that they start at exactly the same moment and, if necessary, resamples them so that their ends match.
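
The trim-then-resample idea can be sketched as follows. This is a minimal illustration, not the ZYLIA software: it assumes each recording is a NumPy array of shape (channels, samples), that the synchronization impulses arrive on channel index 19 (the twentieth channel), and that the first and last impulses mark the common start and end. Linear interpolation keeps the sketch short; a production tool would use band-limited resampling such as `scipy.signal.resample_poly`.

```python
import numpy as np

SYNC_CHANNEL = 19  # assumption: the sync input is the last of the 20 ZM-1S channels


def impulse_bounds(sync, threshold=0.5):
    """Return the sample indices of the first and last sync impulse."""
    hits = np.flatnonzero(np.abs(sync) >= threshold)
    if hits.size < 2:
        raise ValueError("need at least two sync impulses")
    return int(hits[0]), int(hits[-1])


def resample_linear(signal, new_length):
    """Stretch or shrink every channel to new_length samples (linear interpolation)."""
    old_t = np.linspace(0.0, 1.0, signal.shape[1])
    new_t = np.linspace(0.0, 1.0, new_length)
    return np.vstack([np.interp(new_t, old_t, ch) for ch in signal])


def align_recordings(recordings, threshold=0.5):
    """Trim each (channels, samples) array so it starts at its first sync impulse,
    then resample every recording to the length of the first one so the ends match."""
    trimmed = []
    for rec in recordings:
        start, end = impulse_bounds(rec[SYNC_CHANNEL], threshold)
        trimmed.append(rec[:, start:end + 1])
    target = trimmed[0].shape[1]
    return np.stack([
        seg if seg.shape[1] == target else resample_linear(seg, target)
        for seg in trimmed
    ])
```

Resampling every recording to a single reference length corrects clock drift between arrays, which trimming alone cannot do.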

The 3-meter micro USB cables can be connected to 10-meter USB extensions, which can in turn be connected to an active USB hub and another 10-meter USB extension. This gives a total cable length of approximately 23 meters (75.5 feet).
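
The total follows from adding the segments (3 + 10 + 10 m) and converting with the international foot of exactly 0.3048 m. In this small worked example the active hub is treated as adding no length of its own.

```python
FEET_PER_METER = 1 / 0.3048  # the international foot is defined as exactly 0.3048 m


def total_run(segments_m):
    """Sum cable segment lengths in meters and return (meters, feet)."""
    meters = sum(segments_m)
    return meters, meters * FEET_PER_METER


# 3 m micro USB cable + 10 m extension + (active hub) + 10 m extension
meters, feet = total_run([3, 10, 10])
print(f"{meters} m ~ {feet:.1f} ft")  # prints "23 m ~ 75.5 ft"
```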

6DoF audio can be achieved only with the ZM-1S microphone, because only this model supports external synchronization. Each ZM-1S microphone has 20 audio channels: 19 for recording the signals from the microphone capsules and 1 for receiving the analog synchronization impulses (the mini-jack input). Additionally, the ZM-1S contains a dedicated chip that allows multiple ZM-1S units to be connected to a single computer over USB. Because of the data transfer speed limit, around 10 ZM-1S can be connected to a single USB 2.0 interface. However, if a computer has more USB controllers, it is possible to aggregate more signals.
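
The load on the USB bus grows linearly with the number of arrays. The estimate below assumes 48 kHz sampling and 24-bit samples (neither is stated in this answer) and counts only the raw audio payload. It ignores USB protocol overhead, and the practical throughput of USB 2.0 is well below its 480 Mbit/s signaling rate, so it illustrates the scaling rather than reproducing the "around 10 arrays" figure, which comes from ZYLIA's own testing.

```python
# Assumptions (not stated in the FAQ): 48 kHz sampling, 24-bit samples.
SAMPLE_RATE_HZ = 48_000
BITS_PER_SAMPLE = 24
CHANNELS_PER_ARRAY = 20  # 19 capsule channels + 1 sync input, per the text


def aggregate(num_arrays):
    """Return (total channels, capsule channels, raw payload in Mbit/s)."""
    channels = num_arrays * CHANNELS_PER_ARRAY
    capsule_channels = num_arrays * (CHANNELS_PER_ARRAY - 1)
    payload_mbit_s = channels * SAMPLE_RATE_HZ * BITS_PER_SAMPLE / 1e6
    return channels, capsule_channels, payload_mbit_s


for n in (1, 5, 10):
    total, capsules, rate = aggregate(n)
    print(f"{n:2d} arrays: {total} channels ({capsules} capsules), ~{rate:.1f} Mbit/s")
```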

The ZYLIA ZM-1S microphone array has an additional input for analog time code, so it’s easy to synchronize all the microphone arrays. You can also connect external recording systems. It is worth pointing out, however, that ZYLIA is not just a microphone but also an audio card. This means that if you send time code to a setup of, for example, ten microphones, you need to think of it as ten audio cards that must be time-synchronized.

In the ZYLIA 6DoF Recording application, we can monitor every array live. Monitoring works by listening to two opposite capsules of the chosen microphone.
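
Picking an opposite pair comes down to finding the capsule whose direction has the smallest cosine with the selected one. In this sketch the capsule directions are hypothetical (the ZM-1S capsule layout is not given here), and `most_opposite` is an illustrative name, not part of the ZYLIA application.

```python
import math


def most_opposite(directions, reference):
    """Index of the capsule pointing most nearly opposite to directions[reference],
    i.e. the one whose direction has the smallest cosine with the reference."""
    ref = directions[reference]

    def cosine(v):
        dot = sum(a * b for a, b in zip(ref, v))
        return dot / (math.hypot(*ref) * math.hypot(*v))

    others = (i for i in range(len(directions)) if i != reference)
    return min(others, key=lambda i: cosine(directions[i]))


# Hypothetical capsule directions (x, y, z); a real array has 19 capsules.
dirs = [(1, 0, 0), (0, 1, 0), (-1, 0, 0), (0, 0, 1)]
left = 0
right = most_opposite(dirs, left)
print(f"monitor capsules {left} and {right}")  # prints "monitor capsules 0 and 2"
```

Routing the two selected capsules to the left and right headphone channels gives a quick, rough stereo check of each array.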

Yes, the LED ring light on the microphone can be dimmed or completely switched off if necessary.

There are several advantages of using the ZYLIA 6DoF production system instead of traditional solutions. One of them is lower processing power consumption, because we use only live-recorded audio without running any simulations. Another great benefit is the faithful reproduction of the real acoustics of the place, which the ZYLIA microphones capture precisely. This accuracy creates a truly immersive feeling, especially since the system delivers high-resolution spatial audio. It is a very detailed representation of the sound sphere, allowing listeners to quickly locate all the sounds that surround them.

However, the most significant advantage of working with Higher Order Ambisonics is that you can prepare the main content once and later mix it down or render it to many formats that allow different experiences, for instance, static binaural rendering or interactive 3D audio.

With the 6DoF tool, we can truly navigate in space and move our listeners toward the aspect of the musical presentation that we want to highlight.

Also, when we record with a Higher Order Ambisonics (HOA) microphone close enough to a source, such as an instrument, the source is no longer a point source. With a spot microphone, the source remains a point source. With an HOA microphone, however, the source has spatial resolution and can be heard differently from different perspectives.

The accuracy of the mapping depends on the accuracy of the measurements made during the recording session. We recommend measuring the distances between all the microphones on the set and using this data to create a grid that can later be mirrored in the software.
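
One way to turn a full set of measured distances into grid coordinates is classical multidimensional scaling (MDS). This is a swapped-in technique for illustration; the page does not say how the ZYLIA software builds its grid. The result is correct only up to rotation, reflection, and translation, so in practice we would anchor one microphone at the origin and align an axis with the room.

```python
import numpy as np


def positions_from_distances(distances, dims=2):
    """Classical MDS: recover coordinates (up to rotation, reflection and
    translation) from a symmetric matrix of pairwise distances in meters."""
    d = np.asarray(distances, dtype=float)
    n = d.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centering @ (d ** 2) @ centering   # double-centered squared distances
    eigvals, eigvecs = np.linalg.eigh(gram)          # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:dims]         # keep the largest ones
    scale = np.sqrt(np.clip(eigvals[order], 0.0, None))
    return eigvecs[:, order] * scale


# Hypothetical measurements: four arrays on a 2 m square.
square = np.array([[0, 0], [2, 0], [2, 2], [0, 2]], dtype=float)
measured = np.linalg.norm(square[:, None, :] - square[None, :, :], axis=-1)
grid = positions_from_distances(measured)
print(np.round(grid, 3))
```

With real tape-measure data the distances are slightly inconsistent, so the reconstructed grid only approximates them; measuring every pair, as recommended above, gives MDS more data to average out the errors.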

It is possible to compress the files after recording, for example, using Ogg compression. This should reduce their size by a factor of 10 to 20. These compressed files can be used in the VR application. On our website, two versions of the VR application with Poznań Philharmonic Orchestra recordings are available: one with uncompressed and one with compressed audio. The compressed version is around 3 GB, which is significantly lighter than the full version.

ZYLIA 6 Degrees of Freedom Higher Order Ambisonics Renderer is a MAX/MSP plugin available for macOS and Windows. It allows processing and rendering of ZYLIA Navigable 3D Audio content. With this plugin, users can play back synchronized Ambisonics files, change the listener’s position, and interpolate between multiple Ambisonics spheres. The plugin can be connected to Unity or Unreal through Open Sound Control (OSC). The renderer is also available as a Wwise plugin, which allows developers to use 6DoF audio in various game engines. In the Wwise version, the connection is established through RTPCs (Real-Time Parameter Controls).

In this case, we recommend using Wwise from Audiokinetic. It supports up to 6th-order Ambisonics. The ZYLIA 6DoF Renderer is available as a Wwise plugin and integrates seamlessly with Unity and Unreal Engine. Wwise is a professional tool used not only in game and VR production but also in film production.

For editing and mixing 6DoF content, we can use any DAW that supports multichannel tracks (at least 16 channels), for example, Reaper. However, playback requires the MAX/MSP plugin, which can be connected to the DAW through Open Sound Control (OSC). This allows Reaper to send messages with the listener's position to MAX/MSP, so that you can create a movement path.
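
A movement path is essentially a list of timed listener positions that are interpolated and streamed at a steady rate. In the setup described above, Reaper automation would play this role; the keyframe list and the function names below are hypothetical stand-ins that show the idea with plain linear interpolation.

```python
from bisect import bisect_right


def position_at(keyframes, t):
    """Linearly interpolate the listener position at time t (seconds).
    keyframes: list of (time, (x, y, z)) tuples sorted by time."""
    times = [k[0] for k in keyframes]
    if t <= times[0]:
        return keyframes[0][1]
    if t >= times[-1]:
        return keyframes[-1][1]
    i = bisect_right(times, t)
    (t0, p0), (t1, p1) = keyframes[i - 1], keyframes[i]
    alpha = (t - t0) / (t1 - t0)
    return tuple(a + alpha * (b - a) for a, b in zip(p0, p1))


def sample_path(keyframes, rate_hz):
    """Yield (time, position) pairs at a fixed update rate, ready to be sent as OSC."""
    start = keyframes[0][0]
    steps = int((keyframes[-1][0] - start) * rate_hz)
    for n in range(steps + 1):
        t = start + n / rate_hz
        yield t, position_at(keyframes, t)


# Hypothetical path: walk 2 m forward, then 2 m to the side.
path = [(0.0, (0.0, 0.0, 0.0)), (2.0, (2.0, 0.0, 0.0)), (4.0, (2.0, 2.0, 0.0))]
for t, pos in sample_path(path, rate_hz=2):
    print(f"{t:.1f} s -> {pos}")
```

Linear segments are the simplest choice; smoother motion would call for spline interpolation between keyframes.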

If we want multiple 360° views with corresponding 360° audio in each of them, we have to make as many HOA mixes as there are cameras. Alternatively, the same Ambisonics mix can be used for every camera. In general, the workflow consists of creating the necessary number of HOA mixes, editing all the videos, and combining the audio with the video.

Yes, the interpolation algorithm processes the signal sample by sample.

Yes, ZYLIA plugins are compatible with any audio system that operates in the Ambisonics signal domain.

The CPU usage during rendering is low. For example, you can use it as a rendering plugin on a smartphone.

ZYLIA 6DoF Navigable Audio Orchestra

https://www.zylia.co/zylia-6dof-vr-orchestra-pc.html

This application uses the ZYLIA 6DoF rendering algorithm to simultaneously interpolate signals from 30 3rd-order Ambisonics sources, that is, 480 channels (16 per source). The application runs on the Oculus platform, which is based on Android.
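
The channel count of each source follows from its Ambisonics order: full-sphere Ambisonics of order N uses (N + 1)² channels, so a 3rd-order source has 16 channels, which also explains the 16-channel minimum for DAW tracks mentioned earlier. A small helper makes the arithmetic explicit; the function names are illustrative.

```python
def ambisonic_channels(order):
    """Number of channels for full-sphere (periphonic) Ambisonics of a given order."""
    if order < 0:
        raise ValueError("order must be non-negative")
    return (order + 1) ** 2


def total_channels(num_sources, order):
    """Channels the renderer has to process for num_sources inputs of one order."""
    return num_sources * ambisonic_channels(order)


for n in range(1, 7):
    print(f"order {n}: {ambisonic_channels(n)} channels")
```

For example, `total_channels(30, 3)` gives the number of channels for thirty 3rd-order inputs.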

You can also learn more about the computational complexity of ZYLIA 6DoF here:

https://www.youtube.com/watch?v=Sm6RV8MrXcU

Yes, that’s the main purpose of the ZYLIA 6DoF renderer:

https://www.zylia.co/zylia-6dof-hoa-renderer.html

The renderer operates in the Ambisonics domain. That is, it takes multiple Ambisonics signals as inputs, which can be either recorded or synthetically generated. It then produces a single Ambisonics signal at a given listener position:

https://www.youtube.com/watch?v=1J4_0UhrJjM
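
ZYLIA's interpolation algorithm is not described on this page, so the sketch below uses a deliberately naive stand-in, inverse-distance weighting, and is not ZYLIA's method. It only illustrates the input/output contract: several Ambisonics signals of the same order and channel convention go in, and one Ambisonics signal of that order comes out. The function name, the `power` parameter, and the array shapes are assumptions.

```python
import numpy as np


def idw_mix(signals, positions, listener, power=2.0, eps=1e-9):
    """Blend several Ambisonics signals into one by inverse-distance weighting.

    signals:   array (points, channels, samples), all the same order/convention
    positions: array (points, 3), capture-point coordinates in meters
    listener:  length-3 listener position in meters
    """
    offsets = np.asarray(positions, float) - np.asarray(listener, float)
    distances = np.linalg.norm(offsets, axis=1)
    weights = 1.0 / (distances ** power + eps)  # eps avoids division by zero
    weights /= weights.sum()                    # weights sum to 1
    # Contract the capture-point axis: result has shape (channels, samples).
    return np.tensordot(weights, np.asarray(signals, float), axes=1)
```

Because the output is a linear combination of signals in the same format, it stays a valid Ambisonics signal that any decoder can handle. In an interactive renderer, the weights would be recomputed as the listener moves.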

Any playback or decoding system can process the signal from the ZYLIA 6DoF renderer as long as it operates in the Ambisonics domain, for example:

- 2-channel binaural playback, using any Ambisonics-to-binaural decoder

- a multichannel loudspeaker setup, using the ZYLIA Studio PRO plugin or any other Ambisonics-to-loudspeaker decoder, e.g., the AllRADecoder from the IEM Plug-in Suite

ZYLIA Studio PRO also supports irregular loudspeaker distributions on the sphere.

Right now, video-sharing platforms like Facebook or YouTube do not allow each viewer to navigate individually through the audio scene. This kind of fully immersive, navigable experience can be achieved through the VR application for a smartphone, PC, or VR headset.

However, we can share multi-point 360° audio-video content on Facebook or YouTube. In this case, multiple Ambisonics capture points would be combined with multiple 360° cameras. An operator would switch the viewer between the cameras, but viewers would be able to rotate and look around at each spot by themselves. The sound they hear would, of course, follow that movement.

A 3DoF VR headset allows a person to look around in the VR environment by rotating their head around 3 axes (yaw, pitch, and roll). However, you can use a controller or touchpad to add translational movement (sway, heave, and surge) to your experience and in this way move freely around the space.
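
Head rotation alone (3DoF) can be handled by counter-rotating the Ambisonics scene. The sketch below shows this for yaw only, on a single first-order sample. It assumes ACN channel order (W, Y, Z, X) and azimuth measured counter-clockwise, and it is not taken from any ZYLIA product. Translational movement (sway, heave, surge) cannot be reproduced by rotation, which is why navigating between several capture points is needed for 6DoF.

```python
import math


def rotate_foa_yaw(w, y, z, x, head_yaw_rad):
    """Counter-rotate one first-order Ambisonics sample (ACN order: W, Y, Z, X)
    so the scene stays fixed while the head turns by head_yaw_rad
    (positive = counter-clockwise, i.e. turning left)."""
    a = -head_yaw_rad  # rotate the sound field opposite to the head
    x_rot = x * math.cos(a) - y * math.sin(a)
    y_rot = x * math.sin(a) + y * math.cos(a)
    # W (omnidirectional) and Z (vertical) are unaffected by a yaw rotation.
    return w, y_rot, z, x_rot


# A source at 90° to the left (Y = 1, X = 0). After the listener turns
# 90° to the left, it should appear straight ahead (X = 1, Y = 0).
print(rotate_foa_yaw(1.0, 1.0, 0.0, 0.0, math.pi / 2))
```

Pitch and roll follow the same principle with rotations about the other axes; higher orders need larger rotation matrices.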

During rendering, we use all of the inputs, but even so, the CPU cost is very small compared to object-based sound. The algorithm doesn’t contain any heavy operations like ray tracing or reflection simulation. This allows realistic sound to be reproduced even on limited platforms like the Oculus Quest. The application, which uses 30 signals from recordings of the Poznań Philharmonic Orchestra, is available on Oculus App Lab. We are also preparing a demonstration comparing the 6DoF approach with object-based sound in games. With object-based sound, the more sound objects you have, the higher the CPU usage; in our approach, CPU usage is constant and independent of the number of objects. Overall, our algorithm consumes about 100 to 10,000 times less CPU than the object-based approach.
